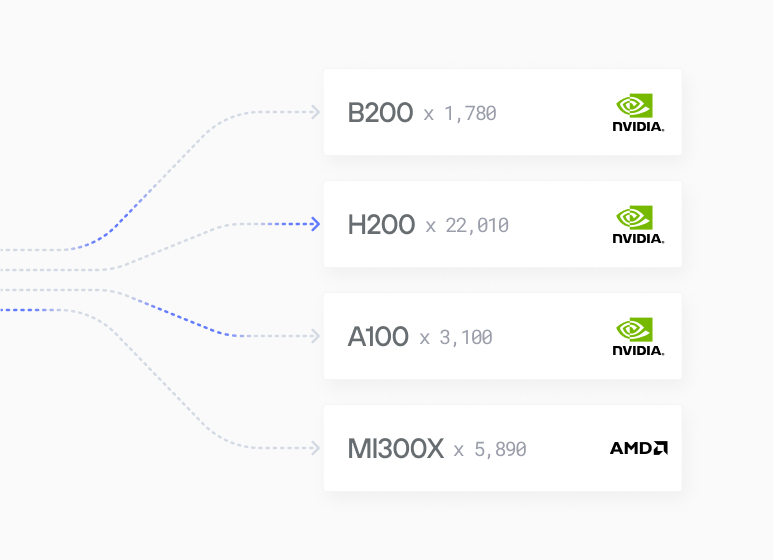

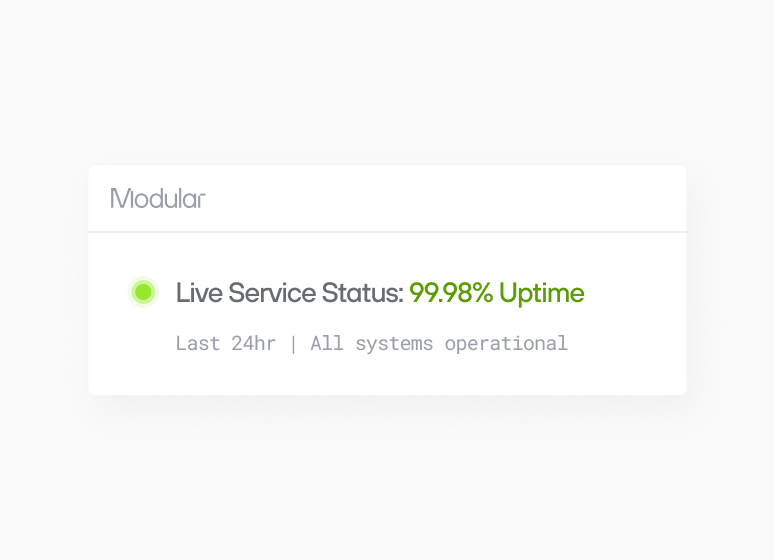

AI inference has a cost problem. Hardware alone isn't enough: customers need software that can extract every ounce of performance from the underlying chips. TensorWave and Modular are teaming up to shatter the cost-performance ceiling for AI inference.

Partners

About TensorWave


Get started guide

Install MAX with a few commands and deploy a GenAI model locally.

Browse open models

500+ models, many optimized for lightning-fast performance
