Infinite scale made easy for the largest AI workloads

Mammoth is our kubernetes-native cloud technology powers our deployment, scale and management GenAI applications.

Where Mammoth stands out

Any cloud, any model

We’re built from the ground up to work with any technology. Check out our model library, and if you don’t see your model, let us know, and we’ll optimize it right away. No matter what cloud, or what model. we’re built for the future and any industry changes that come - and they will!

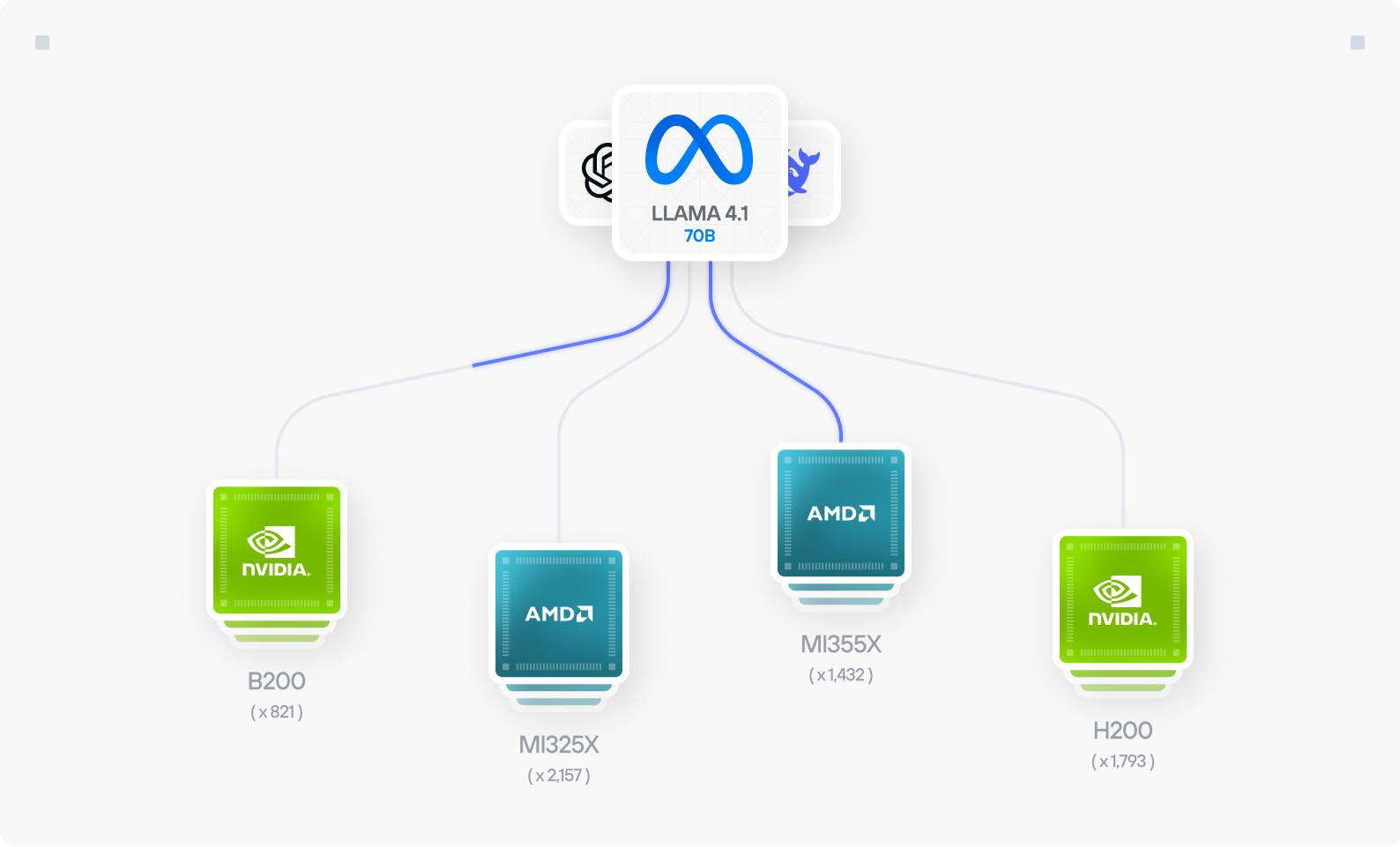

Hardware Portability

Modular is the only platform built from the ground up for the future of Generative AI portability. Today we deploy seamlessly to NVIDIA, AMD, and CPUs. Whatever tomorrow brings, we’ll be the firs tto support it.

Disaggregated inference

By separating the LLM’s inference phases and providing each phase with dedicated resources, we have improved performance and scalability. If reducing latency is your goal, we’ve got you covered.

Intelligent routing

Smart routing at scale

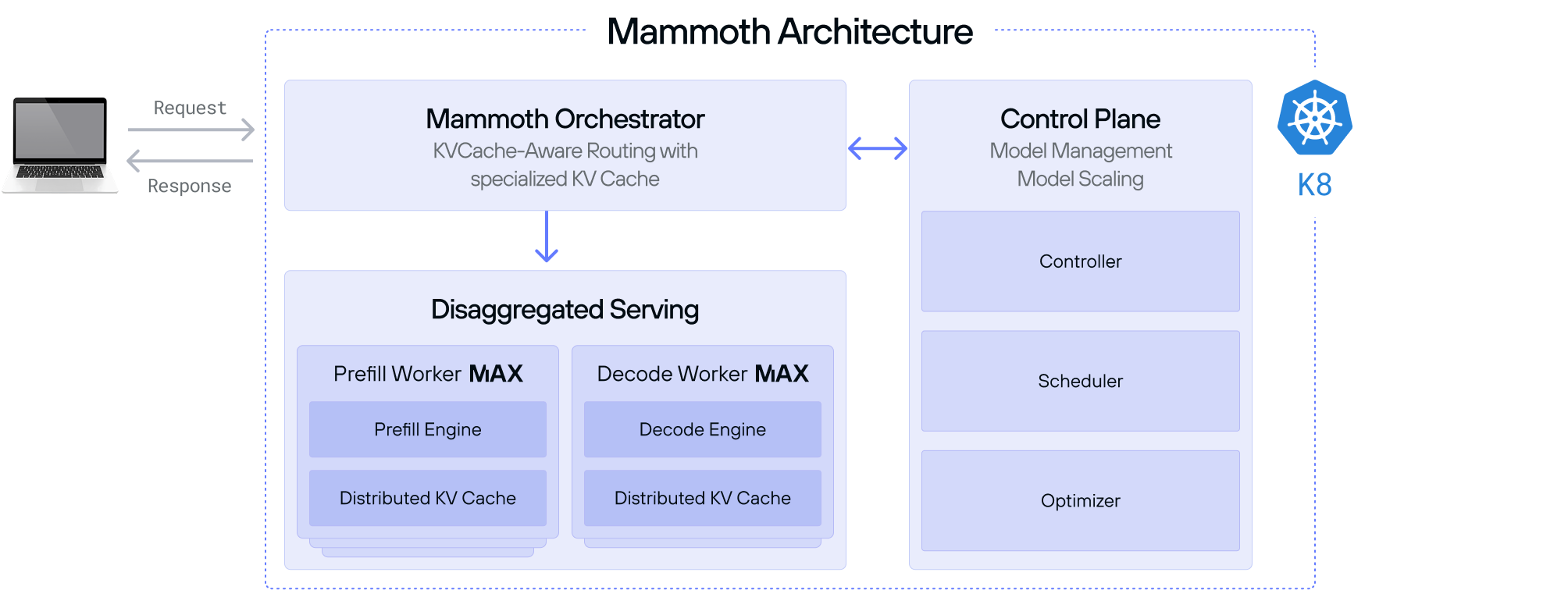

Mammoth consists of a lightweight control plane, intelligent router, and disaggregated serving backends, working together to efficiently deploy and run models across diverse hardware environments.

Components of mammoth

Intelligent model placement

Mammoth's built-in router intelligently distributes traffic, taking into account hardware load, GPU memory, and caching states. Deploy and serve multiple models simultaneously across different hardware without complexity.

Mammoth orchestrator

Rather than simply forwarding requests to the next available worker, the orchestrator uses configurable routing strategies to intelligently direct traffic.

Performance optimization at every level

Through disaggregated inference, we have done the work to separate the model’s inference phases and optimized performance at every step.

“What @modular is doing with Mojo and the MaxPlatform is a completely different ballgame.”

"Mojo is Python++. It will be, when complete, a strict superset of the Python language. But it also has additional functionality so we can write high performance code that takes advantage of modern accelerators."

"Mojo gives me the feeling of superpowers. I did not expect it to outperform a well-known solution like llama.cpp."

“The more I benchmark, the more impressed I am with the MAX Engine.”

“It’s fast which is awesome. And it’s easy. It’s not CUDA programming...easy to optimize.”

“Tired of the two language problem. I have one foot in the ML world and one foot in the geospatial world, and both struggle with the 'two-language' problem. Having Mojo - as one language all the way through is be awesome.”

“Max installation on Mac M2 and running llama3 in (q6_k and q4_k) was a breeze! Thank you Modular team!”

“A few weeks ago, I started learning Mojo 🔥 and MAX. Mojo has the potential to take over AI development. It's Python++. Simple to learn, and extremely fast.”

"It worked like a charm, with impressive speed. Now my version is about twice as fast as Julia's (7 ms vs. 12 ms for a 10 million vector; 7 ms on the playground. I guess on my computer, it might be even faster). Amazing."

“Mojo and the MAX Graph API are the surest bet for longterm multi-arch future-substrate NN compilation”

“The Community is incredible and so supportive. It’s awesome to be part of.”

“Mojo destroys Python in speed. 12x faster without even trying. The future is bright!”

“I tried MAX builds last night, impressive indeed. I couldn't believe what I was seeing... performance is insane.”

“I'm excited, you're excited, everyone is excited to see what's new in Mojo and MAX and the amazing achievements of the team at Modular.”

"C is known for being as fast as assembly, but when we implemented the same logic on Mojo and used some of the out-of-the-box features, it showed a huge increase in performance... It was amazing."

Mojo destroys Python in speed. 12x faster without even trying. The future is bright!

"after wrestling with CUDA drivers for years, it felt surprisingly… smooth. No, really: for once I wasn’t battling obscure libstdc++ errors at midnight or re-compiling kernels to coax out speed. Instead, I got a peek at writing almost-Pythonic code that compiles down to something that actually flies on the GPU."

“Mojo can replace the C programs too. It works across the stack. It’s not glue code. It’s the whole ecosystem.”

“I'm very excited to see this coming together and what it represents, not just for MAX, but my hope for what it could also mean for the broader ecosystem that mojo could interact with.”

"This is about unlocking freedom for devs like me, no more vendor traps or rewrites, just pure iteration power. As someone working on challenging ML problems, this is a big thing."

Get started guide

Install MAX with a few commands and deploy a GenAI model locally.

Read Guide

Browse open models

500+ models, many optimized for lightning-fast performance

Browse models