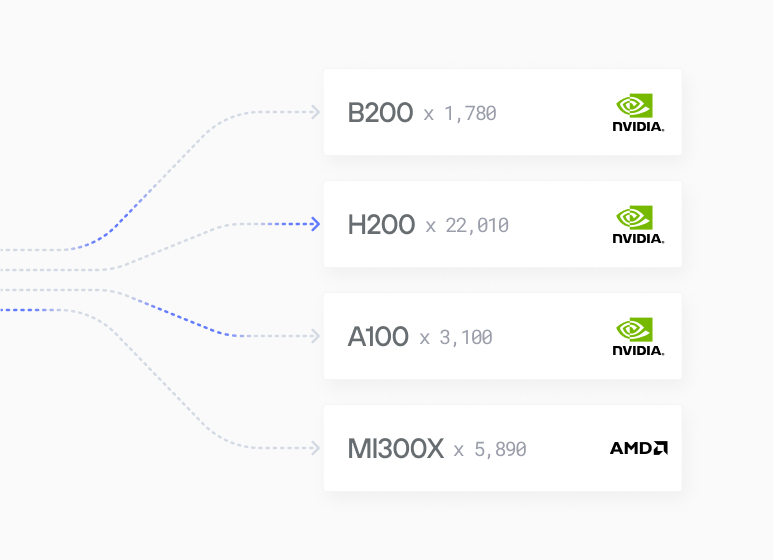

Modular lets Qwerky AI pursue advanced AI research, write optimized code, and deploy it across NVIDIA, AMD, and other types of silicon.

50%

Faster GPU Kernels

3x

Research Velocity

1

Novel Architecture

"Modular allows Qwerky to write our optimized code and confidently deploy our solution across NVIDIA and AMD solutions without the massive overhead of re-writing native code for each system."

Problem

Qwerky AI is building a novel process for optimizing large language models (LLMs) and text-to-speech (TTS) models so that they can run across different types of compute. Their system enables cost-effective deployment of larger models on existing systems, including NVIDIA and AMD hardware, with the goal of making personality-rich AI accessible on commodity hardware.

Before partnering with Modular, Qwerky was frustrated by existing AI infrastructure solutions that created significant barriers to their vision. Traditional frameworks like PyTorch and TensorFlow proved inefficient for their custom Mamba-based architectures, consuming excessive memory and compute for their personality modeling requirements. Writing custom kernels in CUDA was prohibitively complex and time-consuming, and porting those optimizations to ROCm for AMD GPUs required complete rewrites with different toolchains and APIs. Most critically, other infrastructure providers forced them to choose between platforms: their models could run well on NVIDIA or on AMD, but never on both with consistent performance. This fragmentation threatened Qwerky's core mission of building truly accessible, hardware-agnostic AI that could deliver authentic conversational experiences regardless of the user's device.

The need for a unified platform that could deliver efficient custom kernels across all major hardware platforms while supporting novel architectures became essential to Qwerky's success.

Solution

The Modular Platform allows customization at every level, from new model architectures to custom, hardware-agnostic kernels within those models. This is essential for the deployment of Qwerky AI's custom Mamba model, which powers their personality-driven AI agents with efficient long-context understanding and authentic conversational abilities.

Our MAX graph API is uniquely suited for Qwerky's Mamba implementation because it provides the flexibility to optimize state-space models' selective scan operations while maintaining the nuanced personality modeling that makes their agents feel genuinely human.

Our platform's ability to compile custom GPU kernels written in Mojo enables Qwerky to accelerate Mamba's linear-time processing of conversation history while preserving the model's ability to capture subtle personality traits and contextual awareness. Modular is working hand-in-hand with the Qwerky team to optimize their custom Mamba architecture across diverse AI hardware configurations such as 4xH100, 2xH200, and MI300X, which aligns with Qwerky's commitment to eco-friendly, hardware-agnostic AI solutions.
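For readers unfamiliar with the operation, the selective scan used by Mamba-style state-space models can be sketched in a few lines of plain Python. This is only a reference illustration under simplifying assumptions (one channel, a diagonal state matrix, a simplified discretization). It is not Qwerky's kernel, it is not Mojo code, and it does not use any MAX API; the function name `selective_scan` and its parameter names are hypothetical, chosen for this example.

```python
import math
from typing import List, Sequence


def selective_scan(
    x: Sequence[float],
    delta: Sequence[float],
    A: Sequence[float],
    B: Sequence[Sequence[float]],
    C: Sequence[Sequence[float]],
) -> List[float]:
    """Unoptimized reference selective scan for a single channel.

    x[t]     : input value at step t
    delta[t] : input-dependent step size at step t
    A[n]     : diagonal entry of the state matrix (assumed diagonal here)
    B[t][n]  : input-dependent input projection at step t
    C[t][n]  : input-dependent output projection at step t
    """
    state = [0.0] * len(A)  # fixed-size hidden state, independent of sequence length
    outputs: List[float] = []
    for t in range(len(x)):  # one pass over the sequence: linear time in its length
        for n in range(len(A)):
            decay = math.exp(delta[t] * A[n])  # how much of the old state is kept
            state[n] = decay * state[n] + delta[t] * B[t][n] * x[t]
        # Read out the current state through the step-specific projection.
        outputs.append(sum(C[t][n] * state[n] for n in range(len(A))))
    return outputs


# With A = 0 (no decay) and unit delta, B, and C, the scan is a running sum.
steps = 3
print(selective_scan([1.0, 2.0, 3.0], [1.0] * steps, [0.0],
                     [[1.0]] * steps, [[1.0]] * steps))  # [1.0, 3.0, 6.0]
```

Because `delta`, `B`, and `C` vary per step, the model can choose what to keep or forget at each token, while the state stays a fixed size. A production GPU kernel would typically parallelize this recurrence rather than loop sequentially as the sketch does.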

Results

Modular's platform, with MAX and the Mojo language, provides Qwerky with an elegantly simple solution for deploying their custom Mamba models across NVIDIA and AMD without the complexity of traditional AI infrastructure. With MAX's graph APIs, Qwerky can define their entire model architecture through clean Python interfaces and seamlessly integrate custom GPU kernels without complex build systems, linkers, or hardware-specific compilers.

The simplicity is transformative: where writing a custom kernel in CUDA might require hundreds of lines of intricate memory management code, the equivalent kernel written in Mojo is often just 20-30 lines of readable, Python-like code that automatically handles optimal memory patterns across different hardware. Qwerky's engineers can write a selective scan operation for their Mamba model once in Mojo and have it automatically optimized for NVIDIA tensor cores and AMD matrix engines without any additional code changes.

Most importantly, MAX's unified compilation pipeline means Qwerky's small team can iterate on model improvements in hours instead of weeks. They simply modify their Mojo kernels, test locally on any available hardware, and deploy the exact same code to production across their entire heterogeneous infrastructure. This development velocity—combined with the MAX inference server’s ability to deliver near-metal performance—allows Qwerky to continuously enhance their personality modeling capabilities while maintaining the economics that make their human-centered AI accessible to everyone.

About Qwerky AI

Qwerky is building a proprietary system of "distilling" models that significantly lowers either the system memory usage or the TOPS usage, and sometimes both. These savings allow lower-cost deployment of models, make it feasible to deploy larger models on commodity hardware, and free resources for other novel AI improvements, such as a creativity-enhancing inference engine also being prototyped at Qwerky.

Our team has over 15 years of experience in the machine learning and artificial intelligence industry and has a history of bringing these big ideas to market.
