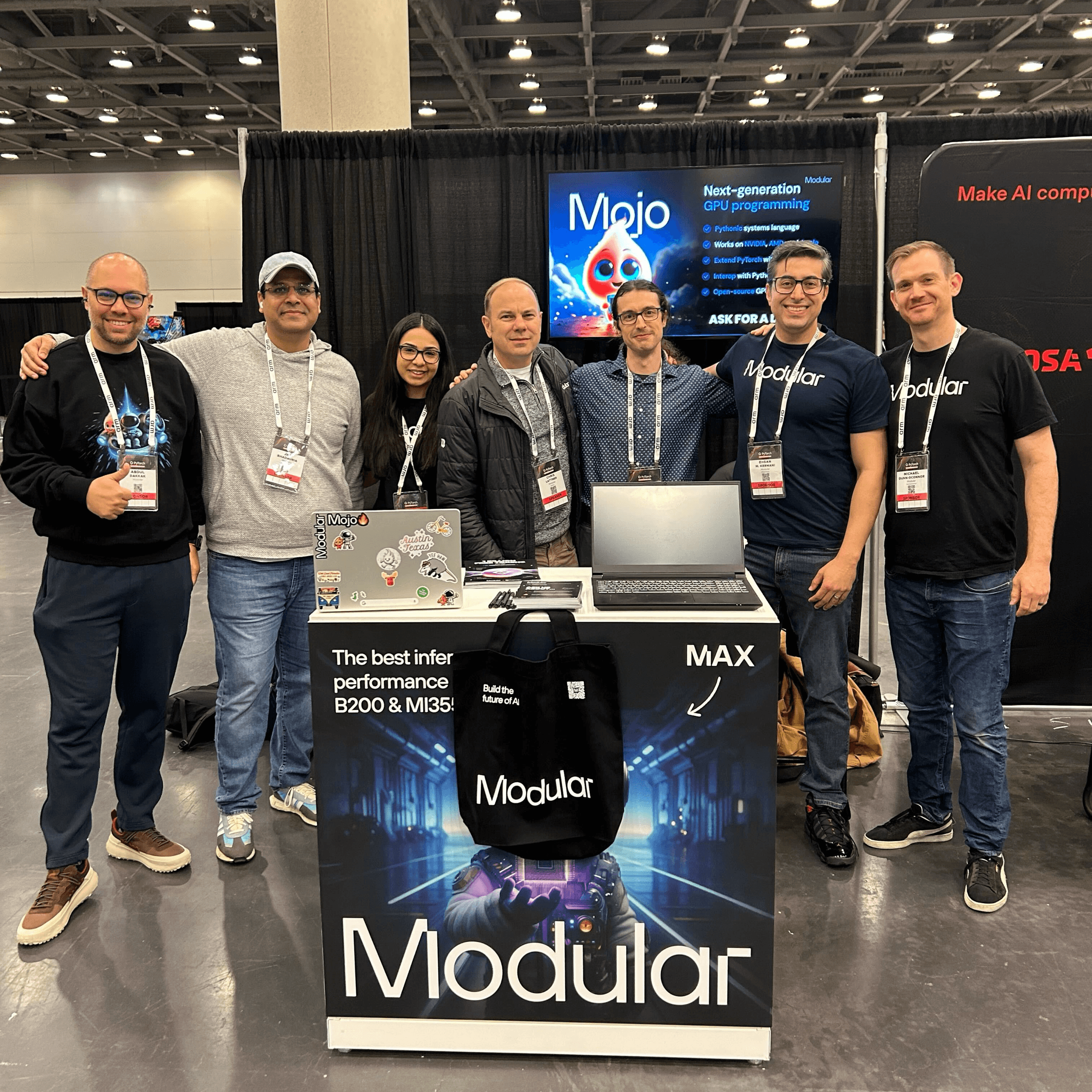

Along with several teammates, I had the privilege of attending two recent developer events in the AI software stack: PyTorch Conference 2025 (October 22-23) in San Francisco and LLVM Developers' Meeting (October 28-29) in Santa Clara. In this post, I’ll share some observations that stood out among all the conference sessions and conversations I had with developers.

These events serve vastly different communities — PyTorch at the AI application layer, and LLVM at the compiler infrastructure layer. Yet I observed striking convergence: both communities are grappling with the same fundamental infrastructure challenges as AI innovation accelerates.

PyTorch Conference: an unknown developer future

My most memorable moment at PyTorch came after showing one of our demos to a computer science student. I asked what questions she had, expecting something about performance comparisons or compiler logic. Instead, she described the many challenges she faced developing kernels for a research project. Like many developers at PyTorch, she was most comfortable writing Python and found the number and complexity of languages and frameworks needed for kernel optimization overwhelming. She asked: "How can I learn this? Where do I start?"

As an educator, I love hearing these questions. But her story captured an uncertainty I sensed throughout the talks and across the expo hall. No one wants to fall behind, yet there are so many languages and frameworks for different stages of the research-to-production journey. The current ecosystem requires integrating disparate tools and ensuring they work efficiently together—a path that feels increasingly inadequate for a future defined by hardware diversity and rapid model evolution.

With the industry changing so rapidly, many attendees weren't discussing what AI will be doing in a few years—but rather what they will be doing in AI. This focus on the human experience of AI development extended throughout panels and talks.

When Mark Saroufim presented Backend Bench, he asked, "What if we could get LLMs to write all of these ops to quickly bring up a new backend?" When describing Helion ("PyTorch with tiles," as he called it), he emphasized how easy it is for LLMs to program in Helion. Across these topics, Saroufim painted a picture of a future where LLMs generate kernels and autotune them to efficient performance.

In Jeremy Howard's keynote conversation with Anna Tong, he discussed what he called "the opposite of vibe coding"—using AI to improve your capabilities and learn new things, rather than automating away complexity. Many open questions remain about what LLMs can do in AI development and what work developers actually want to hand off to an LLM.

Attendees at our booth had similar questions about using LLMs to write kernels in Mojo. This was clearly on many people's minds—how do you make repetitive work more efficient without sacrificing the learning that helps you solve harder problems tomorrow? More than the current state of code assistants, people were wondering what AI engineering will look like in a few years.

Hardware diversity

The uncertainty and opportunity in the AI world was equally clear on the hardware side. AI data centers experienced massive growth in 2025, and demand for hardware shows no signs of slowing. The conference reflected this reality, with hardware vendors of all sizes competing for market share.

Many talks focused on CUDA, confirming that NVIDIA has a deep software moat. Yet hardware diversity is growing rapidly in both data centers and edge devices. Other players are making significant investments—from traditional vendors like AMD, Intel, and Qualcomm, to big tech companies like Google (TPUs) and AWS (Trainium), to accelerator startups like Groq, Rebellions, Tenstorrent, and Furiosa (our booth neighbors).

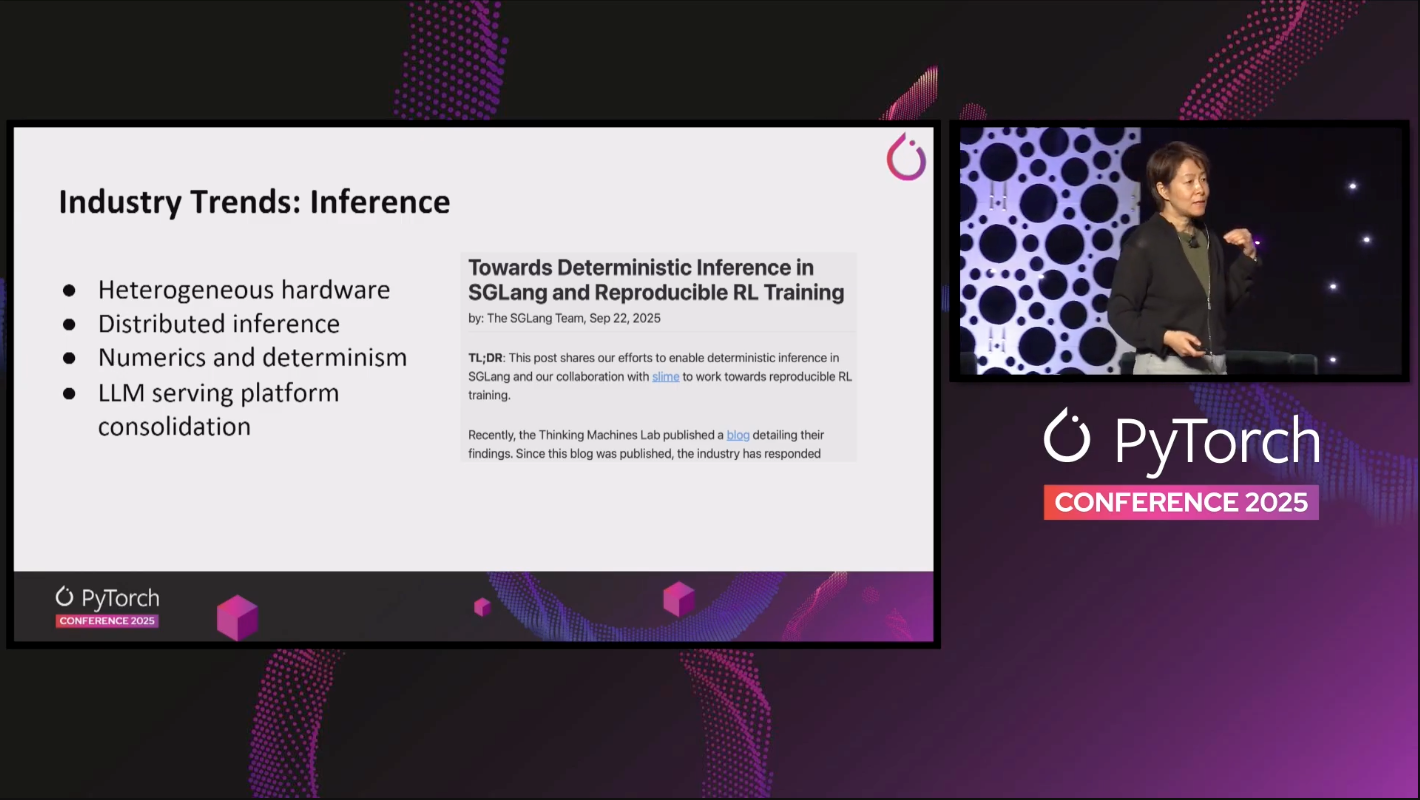

Developers face significant challenges keeping pace with architectural changes across hardware generations and managing diverse hardware in their current stack. In the keynote technical deep dive, Meta's Peng Wu highlighted heterogeneous hardware as the leading industry trend in AI inference. Several presenters and attendees referenced Anthropic's postmortem of three recent issues, which describes bugs that manifested different symptoms across platforms at different rates. Anthropic's refreshingly candid postmortem clearly resonated with developers who have felt hardware changes outpace their ability to evaluate and debug performance issues.

Achieving peak performance on modern GPUs requires deep, specialized knowledge of hardware-specific features that change with every hardware generation. Developers often must drop into CUDA C++ or even lower-level PTX assembly to unlock performance benefits from new hardware, making kernel authoring a bottleneck that only expert engineers can navigate.

Meanwhile, other teams are working toward a compiler-first future where tools like PyTorch Dynamo, TorchInductor, and compiler dialects like TileIR automatically generate kernels from higher-level abstractions. (Our blog says more about AI compilers here).

As an alternative, Spenser Bauman presented a talk called Mojo + PyTorch: A Simpler, Faster Path To Custom Kernels, explaining how to write GPU kernels in Mojo (a Python-style language) that are both high-performance and hardware-agnostic. Nearly every developer I spoke to about kernel development mentioned the same objectives.

Developers want high-performance inference across a diverse and ever-changing hardware stack—but not at the cost of developer experience. If they were going to change their process, they wanted a single language that would meet their needs for both portability and precise control. They also wanted to write code that would last longer than a single hardware generation. It was refreshing to talk with so many folks who met industry uncertainty with an open mind and enthusiasm for learning something new.

LLVM Developers' Meeting: how to build for diverse hardware

The following week, I attended the LLVM Developers' Meeting.

While the conference covered a wide variety of compiler topics—from optimizing C loops to mitigating hardware vulnerabilities—several sessions showed how the LLVM community is facing the same hardware diversity challenges as the PyTorch community.

This year's LLVM Developers' Meeting featured a recurring focus on MLIR—an open-source compiler framework uniquely designed to build compilers for diverse hardware. MLIR appeared throughout the event, from training workshops to sessions on dialects, pattern rewrites, crash reproducers, and even Python DSLs for MLIR programmability.

The LLVM community continues adopting MLIR to build robust support for the explosion of hardware targets—CPUs, GPUs, TPUs, and other AI accelerators—without fragmenting their tools or creating maintenance nightmares. The Modular team also presented talks about our experience with MLIR, which forms the foundation of both the Mojo programming language and the MAX graph compiler (our slides are linked below).

Thinking in decades, not years

Both conferences centered on open-source tools, so it's no surprise that open-source dominated discussions about AI's future. Meta's Alban Desmaison celebrated PyTorch's 9th birthday and highlighted the growing scale of GitHub contributions. Jeremy Howard called open-source ”the right way for the global community to advance our software capabilities” and made reference to the outsized impact of DeepSeek on the open-source community.

The keynote presentations at the LLVM Developers' Meeting stood out by taking a long-term view of open-source projects. Peter Smith, Principal Engineer at Arm, opened his talk by looking back at the compiler world in 25 BC (Before Clang). He outlined, in technical detail, the 15 years he and his team spent incrementally replacing the proprietary parts of Arm's toolchain with LLVM technology—now offering a fully open-source LLVM-based toolchain using LLVM libc. He spoke about making decisions on migration and maintenance strategies that would impact his team's work for decades to come.

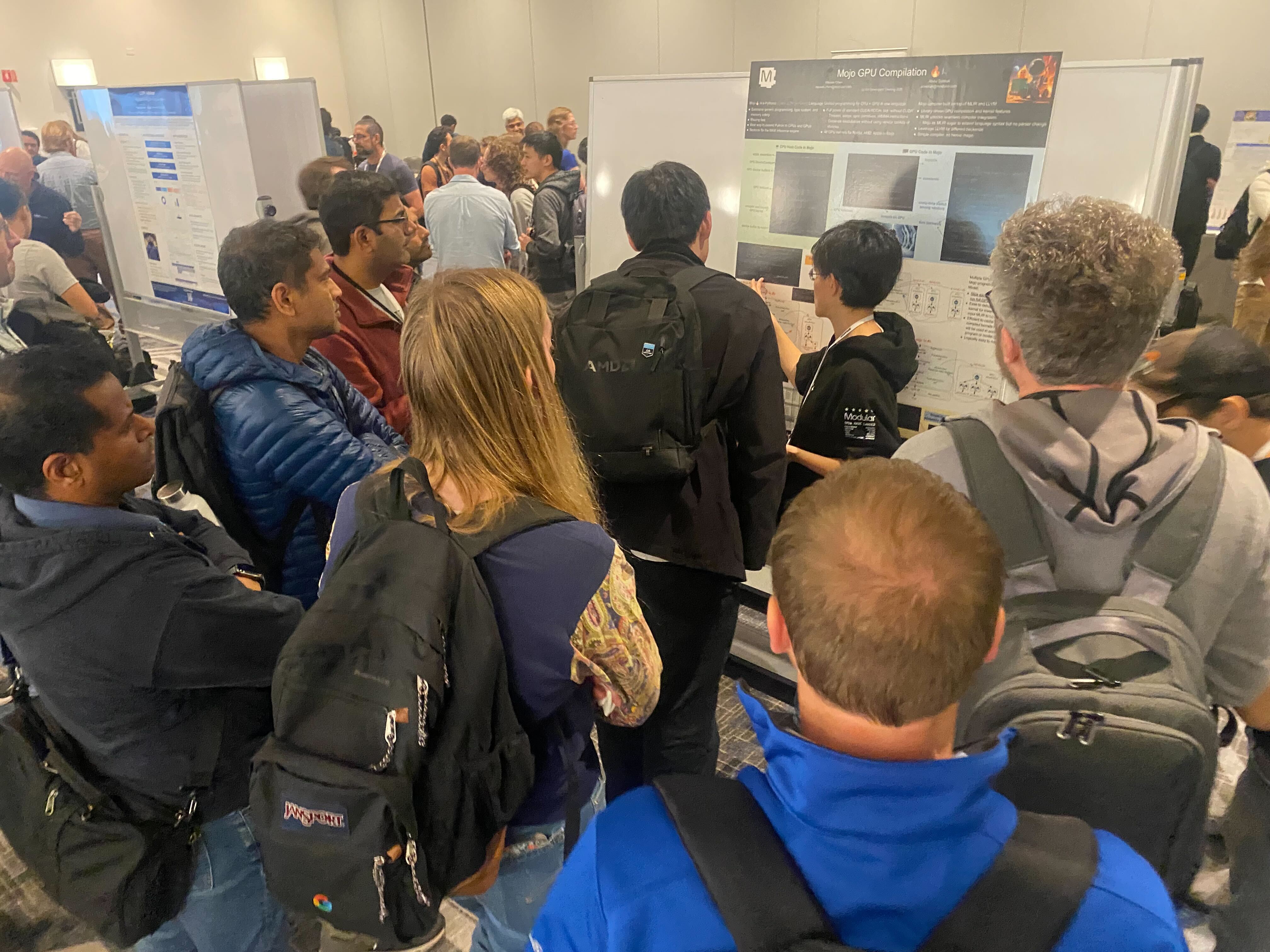

The compiler engineers who crowded around Weiwei Chen's poster session on Mojo GPU Compilation were also thinking long-term. To build software that lasts decades, you can't optimize for a single chip. You need to solve for performance across devices, vendors, and workloads. You need a way to build for both the hardware in front of you and the hardware that doesn't exist yet. This long-term thinking had a notably positive impact on the community. Developers from competing hardware vendors and software teams showed genuine curiosity and enthusiasm for each other's work—they didn't seem focused on who's the biggest fish today, but on building something for the future.

The convergence: same problems, different layers

Attending both conferences back-to-back revealed a clear pattern: whether you're working at the PyTorch AI layer or the LLVM compiler layer, you face similar challenges:

- The two-language problem: PyTorch developers write Python but still need C++ for performance. Compiler developers support high-level languages but must understand low-level hardware.

- Hardware fragmentation: The explosion of AI accelerators—NVIDIA, AMD, Qualcomm, custom ASICs—means both communities spend enormous effort on hardware-specific optimizations across vendors and generations.

- Performance vs. productivity: Researchers want Python's ease of use. Production engineers need C++'s performance. Compiler engineers want portability but need hardware-specific optimizations.

- Velocity vs. stability: AI moves so fast that toolchains struggle to keep up. By the time infrastructure matures for one generation of models or hardware, the next wave has already arrived.

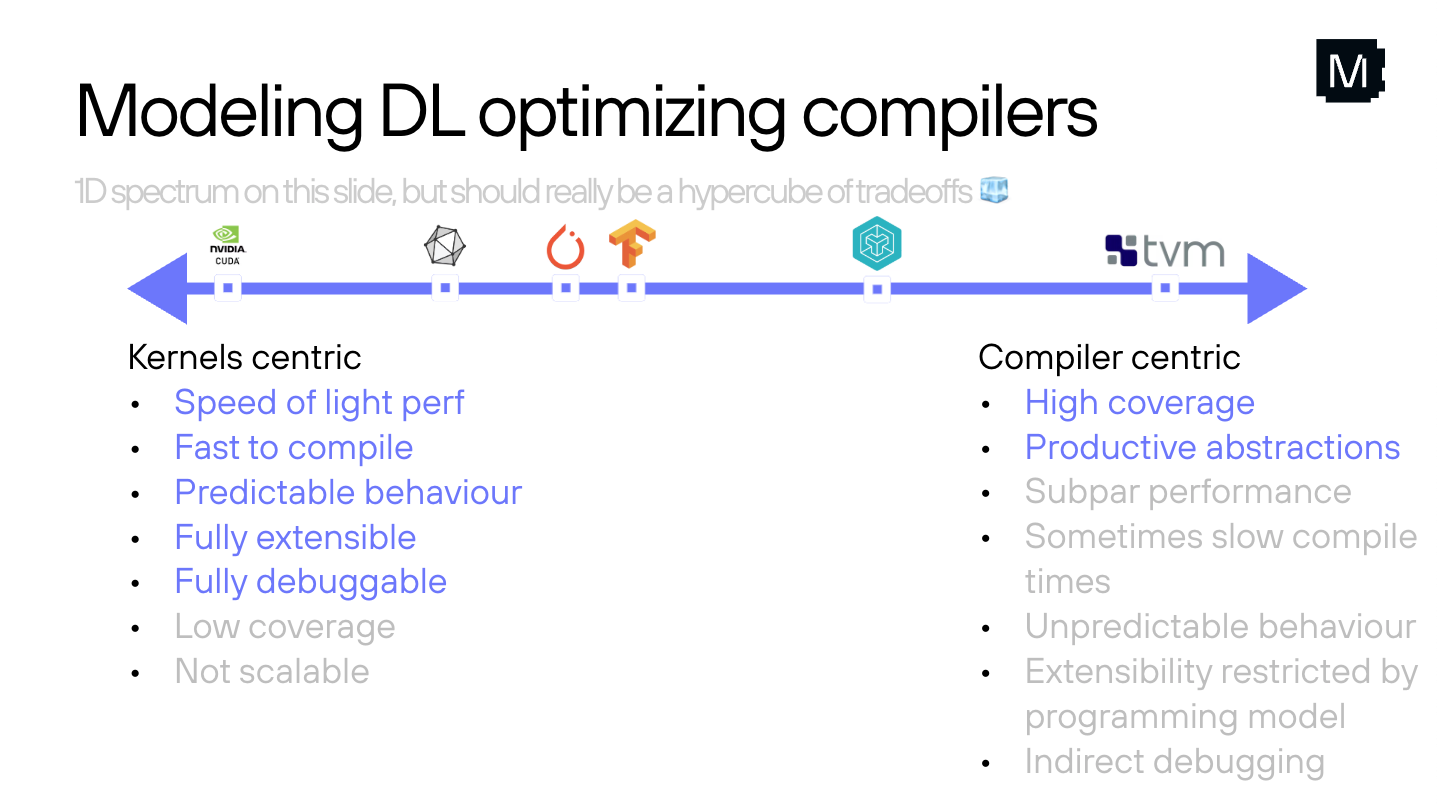

Another way to describe it is a "hypercube of tradeoffs,” as Feras Boulala said in his talk about our MAX JIT Graph Compiler. He uses this term to described the software dilemmas facing deep learning engineers—it’s not just tradeoffs in performance vs. portability and low-level control vs. high-level abstractions, but also tradeoffs in debugging features, extensibility, and many other considerations.

Bridging the gap: an integrated approach

Talking to developers at both conferences, I noticed a pattern: they weren't looking for incremental improvements to existing tools. They were wondering if there was a fundamentally different approach—one that could collapse these tradeoffs rather than force them to choose.

We believe the Modular Platform offers this kind of fundamentally different approach.

At the center of the Modular Platform is the Mojo language. Built upon MLIR from the ground up, it provides a single programming language for the full stack—from high-level AI application code on CPUs down to low-level GPU kernels. At both conferences, we shared how Mojo unlocks programmability, performance, and portability with these sessions:

- "Mojo + PyTorch: A Simpler, Faster Path To Custom Kernels" by Spenser Bauman at PyTorch Conference showed how to write GPU kernels for PyTorch using Mojo, delivering high-performance kernels that are also hardware-agnostic. See the slides and watch on YouTube.

- "Building Modern Language Frontends with MLIR" by Billy Zhu and Chris Lattner at the LLVM event was a technical deep dive on how Mojo leverages MLIR to create a compile-time metaprogramming system for heterogeneous computing. See the slides.

- "Mojo GPU Compilation" by Weiwei Chen and Abdul Dakkak at LLVM was a lightning talk on how Mojo's unique compilation flow enables CPU+GPU programming and efficiently offloads GPU kernels to the accelerator. See the slides.

On top of Mojo is the MAX inference framework, which uses Mojo's powerful metaprogramming and compiler technology to deliver portability across the growing hardware landscape without sacrificing performance. Conference topics included:

- "Modular MAX's JIT Graph Compiler" by Feras Boulala at LLVM was a deep dive on our MLIR-based graph compiler, which leverages unique Mojo features that let AI engineers register high-performance kernels without touching the lower-level compiler infrastructure—while still benefiting from the compiler's support for heterogeneous hardware. See the slides.

- At our PyTorch Conference booth, we shared several demos with the MAX inference server that achieve SOTA performance on both NVIDIA B200 and AMD MI355. You can try it yourself with a local endpoint by following our quickstart guide.

On top of all that is Mammoth, our Kubernetes-native control plane and router designed for large-scale distributed AI serving. It supports multi-model management, prefill-aware routing, disaggregated compute and cache, and more. For details, see how to scale your deployments with Modular.

Looking ahead

The challenges discussed at both PyTorch Conference and the LLVM Developers' Meeting aren't going away—if anything, they're intensifying as AI models grow larger and hardware becomes more diverse. The path forward requires a solution that can:

- Unify developers across backgrounds and skill levels.

- Unify low-level software across frameworks and runtimes.

- Unify hardware across vendors, devices, and use cases.

The conversations at both conferences made one thing clear: the AI community isn't just ready for solutions that reduce fragmentation—they're actively searching for them. The student who asked “where do I start?” and the compiler engineers thinking in decades, not quarters, are all looking for the same thing: tools that can keep pace with innovation without requiring them to constantly rebuild their foundations.

That’s precisely why we built the Modular Platform: it allows you to build and deploy with a unified platform that doesn't ask you to choose between performance, portability, and productivity.

Learn more about our strategy to democratize AI compute.

Discover what Modular can do for you