Given time, budget, and expertise from a team of veterans who’ve built this stack before, Modular set out to solve one of the defining challenges of our era: how to Democratize AI Compute. But what does that really mean—and how does it all add up?

This post is your end-to-end guide. We’ll walk through the technology, the architecture, and the underlying philosophy—before diving deeper into each layer in future posts.

At the heart of it is a singular idea: to democratize AI compute, we need to unify the scattered stars of AI:

- Unify developers—across backgrounds and skill levels.

- Unify low-level software—across frameworks and runtimes.

- Unify hardware makers—across vendors, devices, and use cases.

- Unify an industry of competing interests—who have grown a chaotic software stack that consolidated around one dominant vendor.

For too long, the AI software landscape has been a disconnected starfield—brilliant points of innovation, but hard to navigate, harder to connect, and spreading further apart every year. Modular is building the infrastructure to turn that starfield into a constellation: a coherent system that helps developers chart their path, unites the stars, and unlocks the full potential of AI.

Success in AI isn’t just about how powerful your hardware is, it’s about how many people can use it. That means lowering barriers, opening access, and building software tools that people love to use—not just to run benchmarks.

🌌 The World’s First Unified AI Constellation

Democratizing AI compute is about removing the invisible dark matter that divides the landscape. Today, the stars of AI are scattered across vendor boundaries, siloed software stacks, and outdated abstractions. We all want higher throughput and lower latency and TCO, but AI developers & deployers are forced to choose: a “safe bet for today” or owning your destiny with portability and generality in the future.

At Modular, we believe there’s a better way. One that doesn’t ask developers to compromise: we’re building toward a unified constellation.

Our goal is to expose the full power of modern hardware—NVIDIA’s Tensor Cores, AMD’s matrix units, Apple’s advanced unified memory architecture—not by hiding their complexity, but by building a system that understands it. One that lets developers scale effortlessly across clients, datacenters, and edge devices—without getting lost in a maze of incompatible compilers and fragmented runtimes.

It’s time to move beyond legacy architectures—like OpenCL and CUDA—designed in a pre-GenAI era. CUDA launched the AI revolution, and the industry owes it a great deal. But the future requires something more: a software stack built for GenAI from the ground up, designed for today’s workloads, today’s developers, and today’s hardware and scale.

This constellation can’t be unified by any single hardware vendor: vendors build great software for their chips—but the starry night sky is much broader. It spans NVIDIA, AMD, Intel, Apple, Qualcomm, and others in the hardware regatta ⛵, along with a wave of new stars rising across the AI hardware frontier. We think the industry must link arms and build together instead of fragmenting the galaxy further.

At Modular, we measure success with a simple but ambitious goal:

We want a unified, programmable system (one small binary!) that can scale across architectures from multiple vendors—while providing industry-leading performance on the most widely used GPUs (and CPUs).

That’s what a unified constellation means: Not uniformity—but a coherent, collaborative, and collective momentum. A system that celebrates hardware diversity while empowering developers with a common map—one they can use to build, explore, and reach further than ever before.

🪐 A Galactic Map for AI Compute

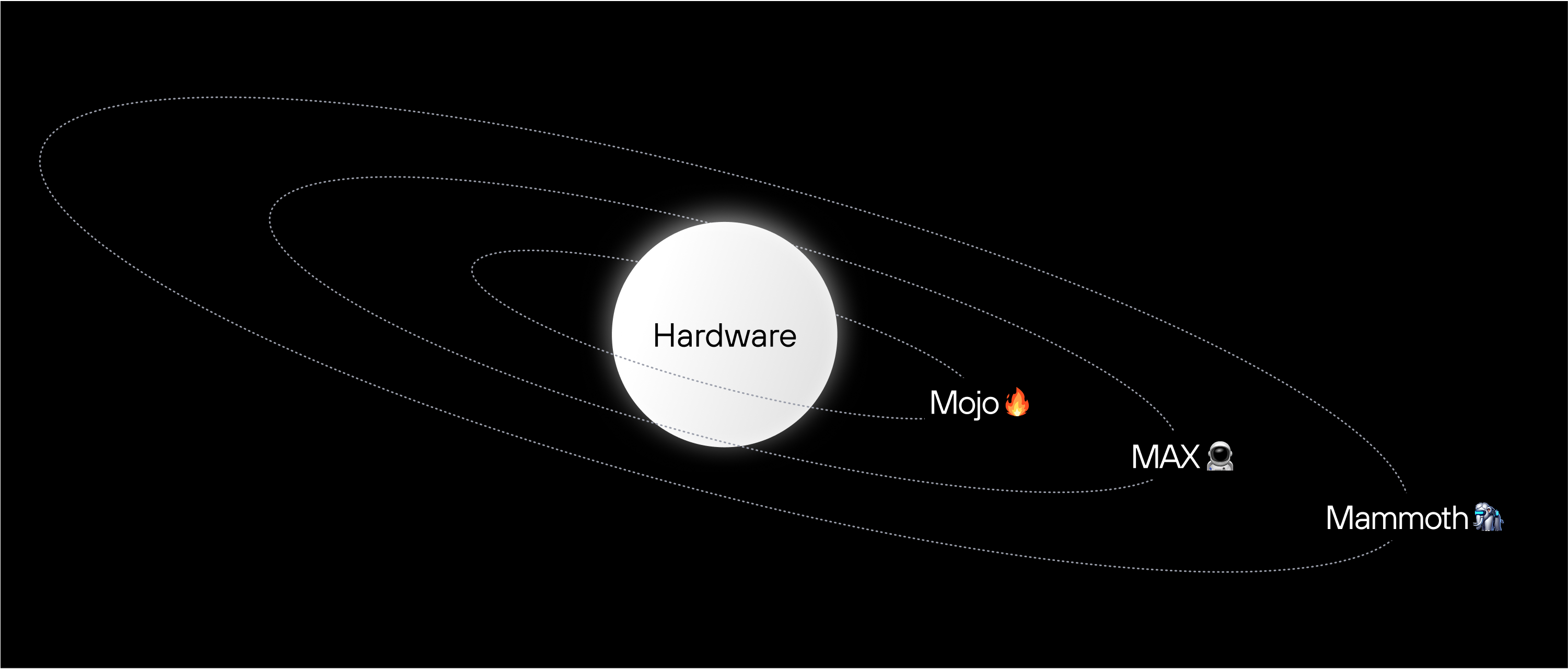

The AI universe is vast—and it’s rare to find two developers who work on exactly the same thing. Some operate near the core, close to the metal. Others orbit further out: building models, deploying inference pipelines, or managing massive GPU fleets. The landscape is fragmented—but it doesn’t have to be.

We designed the Modular Platform to unify this space with a novel, layered architecture: a system that’s powerful when used as a whole, but modular enough to plug into your existing tools like PyTorch, vLLM, and CUDA. Whether you're writing kernels, consolidating your inference platform, or scaling your infrastructure, Modular meets you where you are—and lights the path to where you're going.

Let’s dig into how the layers stack up

Mojo🔥: A Programming Language for Heterogenous GenAI Compute

Mojo is a new language for a GenAI era, designed to solve the language fragmentation problem in AI. Developers love Mojo because it provides the speed and capability of C++, Rust, and CUDA but with familiar and easy-to-learn Python syntax that AI developers demand.

Mojo seamlessly integrates into existing workflows—Mojo files live side-by-side with Python modules with no bindings or extra build tools—while unlocking modern hardware: CPUs, GPUs, and custom accelerators. It offers developers great flexibility and usability, whether it’s crafting advanced GPU kernels like FlashAttention, leveraging Tensor Cores and TMAs, or implementing AI-specific optimizations with low-level control.

Mojo is like the inner planets of a solar system—close to the heat, close to the metal. This is where performance lives and FLOPS go brrrr.

Though Modular is focused on AI, we believe Mojo's ability to accelerate existing Python code opens up high-performance GPU programming to millions more developers, across domains. We aspire for Mojo to be the “best way to extend Python code” for developers in all domains.

MAX 👩🚀: The Modeling and Serving Layer

Orbiting Mojo is MAX—a unified, production-grade GenAI serving framework that answers the natural follow-up to Mojo’s portability: “Why not just build in PyTorch?” MAX goes where PyTorch stops, packaging state-of-the-art inference into a slim 1 GB container that cold-starts fast.

GenAI is about far more than a forward pass. Modern pipelines juggle KV-cache lifecycles, paged attention, speculative decoding, and hardware-aware scheduling. MAX folds all of that complexity into a familiar, PyTorch-like Python API, so you write dynamic graphs while it delivers predictable, fleet-wide performance.

Picture MAX as the massive gas giant in your GenAI solar system. Compute is the central star, and MAX’s deep “atmosphere” of KV-cache handling, paged attention, and speculative decoding provides the gravitational heft that keeps individual AI apps in orderly orbit while letting new models or hardware drift in without turbulence.

Built for use in heterogeneous clusters, a single MAX binary extracts peak throughput from today’s H200’s, B200’s and MI325’s, growing into tomorrow’s MI355’s and B300’s, and even mixed CPU/GPU footprints. Aggressive batching and memory optimizations drive the highest tokens-per-dollar, while the elimination of surprise recompiles and kernel swaps keeps latency steady under spiky loads—turning research notebooks into production-ready GenAI services without sacrificing speed, flexibility, or hardware choice.

Mammoth 🦣: GPU Cluster Management for the GenAI Age

Mammoth is a Kubernetes-native platform that turns fixed GPU footprints—on-prem or in the cloud—into an elastic, high-performance inference fabric.

GenAI has pushed optimizations higher up the stack: modern transformer models split their pre-fill and decode stages across many GPUs, shattering two old cloud assumptions. First, workloads are no longer stateless—chatbots and agents need to preserve conversational context. Second, GPUs can’t be spun up on demand; they’re capacity-constrained assets tied to multi-year commits, so every TFLOP has to count.

Because Kubernetes is already the control plane enterprises trust, Mammoth simply drops into existing clusters and layers on the capabilities teams are missing:

- MAX-aware orchestration lets Mammoth coordinate with MAX for just-in-time autoscaling, intelligent placement of pre-fill and decode nodes, and fast checkpoint streaming.

- Dynamic, multi-hardware scheduling treats a cluster of accelerators from multiple vendors as one resource pool, bin-packing workloads onto the best silicon in real time.

- A unified declarative ops model exposes one API for on-prem and cloud clusters, so platform teams can ditch bespoke schedulers and hand-rolled scripts.

The result is a simple, scalable orchestration layer that lets CIOs embrace heterogeneous hardware without vendor lock-in—while developers stay entirely inside the Kubernetes workflows they already know.

Mammoth is like the spiral arm of the galaxy—an overarching gravitational framework that organizes many solar systems at once. Mammoth’s scheduling gravity aligns each solar system into smooth, predictable rotation, making room for new “stars” or “planets” (hardware and workloads) without ever destabilizing the galactic whole.

While each of these layers—Mojo, MAX, Mammoth—can stand on its own, together they form a coherent galactic map for GenAI compute: scalable, reliable, and portable across hardware and time.

💠 High Performance Models and Kernels

The Modular Platform is more than a CUDA-replacement—it’s a launchpad that meets two very different personas right where they work:

- AI engineers & MLOps teams want production-ready assets. We ship complete, open-source model pipelines pre-tuned for speed and packaged in a ~1 GB container that run unchanged on CPUs and NVIDIA or AMD GPUs.

- AI researchers & kernel hackers crave low-level control. Our GitHub repo at

modular/modularexposes hand-optimized GPU kernels—FlashAttention, paged attention, KV-cache orchestration, speculative decoding—written in Mojo so you can tweak internals or invent entirely new operators without rewriting the stack.

Because every model and kernel sits on a common runtime, you can start fast with proven building blocks and dive deep only when you need to. The result is the largest coherent library of portable, open-source AI components anywhere—powerful enough for enterprise teams that just want to ship, yet modular enough for researchers pushing the frontier.

Picture these model pipelines as comets that soar around of the solar system—the content that gives the infrastructure meaning.

Open source remains the bedrock of AI progress; a unified ecosystem ensures you can start with something powerful and go further than ever before—whether that means shipping a feature on Monday or publishing a paper on Friday.

🏛️ An Expanding Hardware Constellation

Truly democratizing AI compute requires the ability to scale into far more hardware than any team could individually support—it requires an industry coalition and experts in the hardware to drive best-possible support for their silicon.

Hardware diversity should be the foundation of the modern AI universe, not a problem. More choice and specialized solutions will drive more progress and products into the world.

The Modular stack was specifically designed to scale into a wide range of different accelerators, giving hardware innovators control over their performance and capabilities. Now that Modular can prove portability across multiple industry standard GPUs from leaders like NVIDIA and AMD, we would like to open up our technology platform to far more hardware partners.

We don’t have all the details figured out yet though! If you are part of a hardware company and interested to learn more, please get in touch and we’ll reach out at the right time. If you are an AI developer and would like expanded support for new hardware, please ask that hardware team to reach out to us!

📋 The Mission Checklist

A new AI platform can’t just be clever or well-intentioned—it has to ship and work. Modular's work will never be done, but we can now show real progress on every dimension we believe is critical to Democratizing AI Compute.

Here’s how we judge the Modular Platform against the scorecard we’ve used in this series to evaluate other systems:

- 🚤⛵🛳️🚢 Enable portability across hardware from multiple vendors: Compute is already diverse with many participants, and Modular has demonstrated the ability to scale from CPUs to NVIDIA and to AMD, all from a single unified binary—an industry first. ✅ Modular’s stack is designed to support ASIC’s and more exotic systems, but still needs to prove that. ⚠️

- 🚀 Run with top performance on the industry leader’s hardware: NVIDIA makes great hardware, has the most widely deployed datacenter footprint, and is the most widely used by enterprises. Modular delivers peak performance on NVIDIA’s powerful Hopper and Blackwell architectures, not just alternative hardware. ✅

- 🔧 Provide a full reference implementation: Modular ships a complete, production-grade stack that you can download today: a language, a framework, a runtime, and a Kubernetes-scale system. This isn’t a whitepaper or committee spec—it’s real software you can run in production. ✅

- ⚡ Evolve rapidly: AI moves fast—we move faster. Modular ships major updates every 6–8 weeks, and we’ve brought up complex platforms like H200 and AMD MI325X in record time. This velocity is only possible because of three years of deep tech investment. ✅

- 💻 Cultivate developer love: We build for developers—clean APIs, deep control, and tools that scale from hobby projects to HPC. We’re opening more of the stack every month, and we’re engaging directly through forums, Discord, hackathons, and events. ✅

- 🌐 Build an open community: Modular is vastly open source: hundreds of thousands of lines of high-performance models, kernels, and serving infrastructure. This is the largest portable and open AI GPU stack available today. ✅

- 🧩 Avoid fragmentation across implementations: We embrace openness—but anchor it in a single, stable release process. This gives the ecosystem confidence, avoids version nightmares, and provides a reliable foundation that runs across CPUs and GPUs alike. ✅

- 🛠️ Enable full programmability: No black boxes. Mojo gives you deep control, from low-level GPU kernels to high-level orchestration, all with Pythonic clarity. Modular layers work together—but remain programmable and composable on their own. ✅

- 🦾 Provide leverage over AI complexity: Today’s challenge isn’t just FLOPS—it’s complexity at scale. Modular brings the “best of” in GenAI systems together into one place: compiler, language, and cluster orchestration. ✅

- 🏗️ Enable large-scale applications: Modular isn’t just for benchmarks—it’s for production. Stateful workloads, intelligent scheduling, and resource orchestration are first-class citizens. ✅

- 🧭 Have strong leadership and vision: We’ll let our track record speak for itself. Modular is setting an ambitious course and shipping major milestones. The path ahead is long, and we’re committed to charging into it. ✅

Each goal is ambitious on its own. Together, they define what a true successor to CUDA must deliver. Modular is well on its way—but we don’t support all the world’s hardware and we know that heterogeneous compute has a future far beyond AI.

Democratizing AI compute is a galactic-scale mission—far too ambitious for any one company alone. We as an industry need to continue to come together to solve this problem as a consortium.

Stay tuned for Mojo🔥: Tackling xPU Programmability

This post laid out the big picture—a galactic map 🗺️ of Modular’s architecture and mission. But to understand how it all works, we have to start at the core.

In the next post, we’ll descend from the star clusters back toward the inner planets with Mojo: the foundation of Modular’s stack, and our boldest bet. It’s a new kind of programming language—designed to give developers deep, precise control over modern hardware, without giving up the clarity and flexibility of Python. It’s where performance meets programmability, where the hardware burns hot, truly where the magic begins.

“The future is already here — it’s just not evenly distributed.” — William Gibson

Until then, may your GPU fleets chart safe paths through the star systems—without falling into the black hole of complexity.

-Chris