Blog

Democratizing AI Compute Series

Go behind the scenes of the AI industry with Chris Lattner

How to Beat Unsloth's CUDA Kernel Using Mojo—With Zero GPU Experience

Traditional GPU programming has a steep learning curve. The performance gains are massive, but the path to get there (CUDA, PTX, memory hierarchies, occupancy tuning) stops most developers before they start. Mojo aims to flatten that curve: Python-like syntax, systems-level performance, no interop gymnastics, and the same performance gains.

🔥 Modular 2025 Year in Review

Our four-part series documenting the path to record-breaking matrix multiplication performance became essential reading for anyone serious about LLM optimization. The series walks through every optimization step—from baseline implementations to advanced techniques like warp specialization and async copies—showing you exactly how to extract maximum performance from cutting-edge hardware.

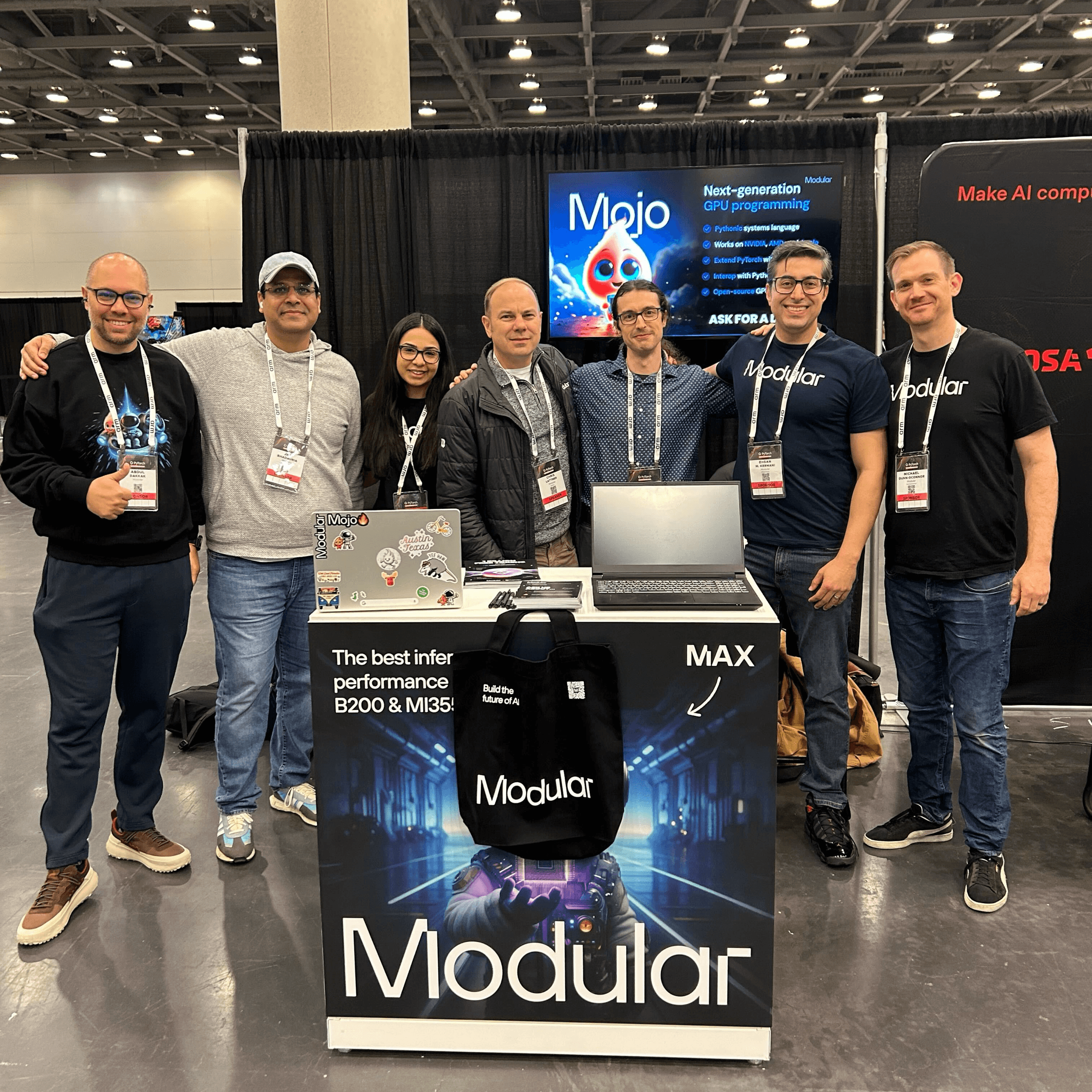

PyTorch and LLVM in 2025 — Keeping up With AI Innovation

Along with several teammates, I had the privilege of attending two recent developer events for the AI software stack: PyTorch Conference 2025 (October 22-23) in San Francisco and the LLVM Developers' Meeting (October 28-29) in Santa Clara. In this post, I’ll share the observations that stood out from the conference sessions and my conversations with developers.


Modverse #51: Modular x Inworld x Oracle, Modular Meetup Recap and Community Projects

The Modular community has been buzzing this month, from our Los Altos Meetup talks and fresh engineering docs to big wins with Inworld and Oracle. Catch the highlights, new tutorials, and open-source contributions in this edition of Modverse.

Modverse #50: Modular Platform 25.5, Community Meetups, and Mojo's Debut in the Stack Overflow Developer Survey

This past month brought a wave of community projects and milestones across the Modular ecosystem! Modular Platform 25.5 landed with Large Scale Batch Inference, leaner packages, and new integrations that make scaling AI easier than ever. It’s already powering production deployments like SF Compute’s Large Scale Inference Batch API, cutting costs by up to 80% while supporting more than 15 leading models.

Modverse #49: Modular Platform 25.4, Modular 🤝 AMD, and Modular Hack Weekend

Between a global hackathon, a major release, and standout community projects, last month was full of progress across the Modular ecosystem! Modular Platform 25.4 launched on June 18th, alongside the announcement of our official partnership with AMD, bringing full support for AMD Instinct™ MI300X and MI325X GPUs. You can now deploy the same container across both AMD and NVIDIA hardware with no code changes, no vendor lock-in, and no additional configuration!

Modverse #48: Modular Platform 25.3, MAX AI Kernels, and the Modular GPU Kernel Hackathon

May has been a whirlwind of major open source releases, packed in-person events, and deep technical content! We kicked it off with the release of Modular Platform 25.3 on May 6th, a major milestone in open source AI. This drop included more than 450k lines of Mojo and MAX code, featuring the full Mojo standard library, the MAX AI Kernels, and the MAX serving library. It’s all open source, and you can install it in seconds with pip install modular, whether you’re working locally or in Colab with A100 or L4 GPUs.

Democratizing Compute Series

Go behind the scenes of the AI industry in this blog series by Chris Lattner. Trace the evolution of AI compute, dissect its current challenges, and discover how Modular is raising the bar with the world’s most open inference stack.

Matrix Multiplication on Blackwell

Learn how to write a high-performance GPU kernel on Blackwell that is competitive with NVIDIA's cuBLAS implementation, while leveraging Mojo's special features to keep the kernel as simple as possible.

Get started guide

Install MAX with a few commands and deploy a GenAI model locally.

Read Guide

Browse open models

500+ models, many optimized for lightning-fast performance

Browse models
