What’s New in Mojo 24.3: Community Contributions, Pythonic Collections and Core Language Enhancements

Mojo🔥 24.3 is now available for download, and this is a very special release. It is the first major release since the Mojo🔥 standard library was open sourced, and it is packed with the wholesome goodness of community contributions! The enthusiasm from the Mojo community to enhance the standard library has been truly remarkable. On behalf of the entire Mojo team, we’d like to thank you for all your feedback, discussion, and contributions to Mojo, which are helping shape it into a stronger and more inclusive platform for all.

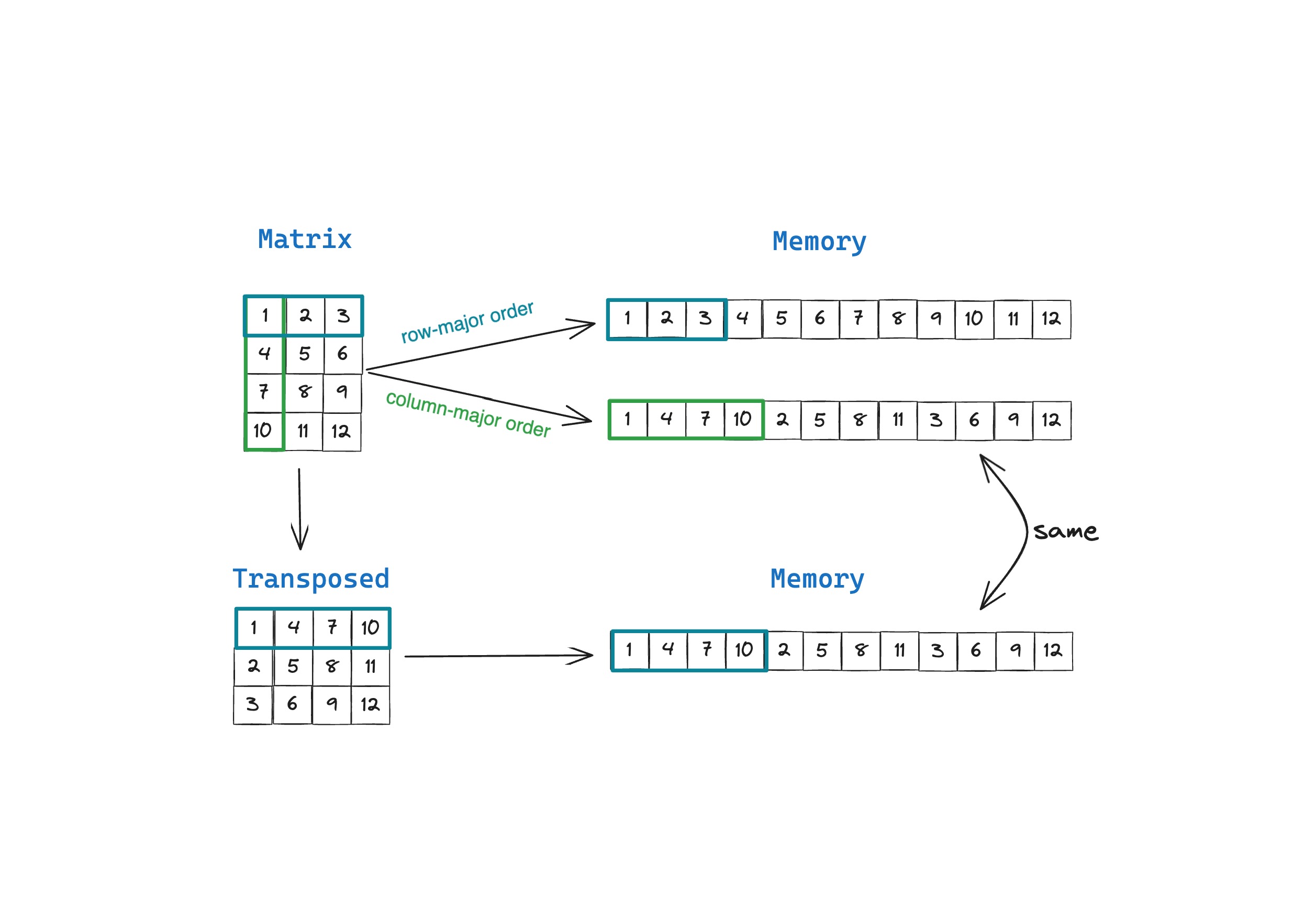

Row-major vs. Column-major Matrices: A Performance Analysis in Mojo and NumPy

A matrix is a rectangular collection of row vectors and column vectors that defines a linear transformation. A matrix, however, is not implemented as a rectangular grid of numbers in computer memory; it is stored as one large array of elements in contiguous memory.
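To make the memory-layout idea concrete, here is a minimal pure-Python sketch of how a 2D index maps to a position in a flat array under each ordering. The helper names (`row_major_index`, `col_major_index`, `flatten`) are hypothetical and chosen for illustration; they are not taken from the post, and they are not the Mojo or NumPy implementation.

```python
def row_major_index(row, col, n_rows, n_cols):
    """Flat offset when the elements of each row are stored next to each other."""
    return row * n_cols + col


def col_major_index(row, col, n_rows, n_cols):
    """Flat offset when the elements of each column are stored next to each other."""
    return col * n_rows + row


def flatten(matrix, order="row"):
    """Copy a list-of-lists matrix into one flat list using the chosen ordering."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    index = row_major_index if order == "row" else col_major_index
    flat = [None] * (n_rows * n_cols)
    for r in range(n_rows):
        for c in range(n_cols):
            flat[index(r, c, n_rows, n_cols)] = matrix[r][c]
    return flat


matrix = [[1, 2, 3],
          [4, 5, 6]]

row_flat = flatten(matrix, order="row")  # [1, 2, 3, 4, 5, 6]
col_flat = flatten(matrix, order="col")  # [1, 4, 2, 5, 3, 6]
print(row_flat)
print(col_flat)
```

The same logical matrix yields two different flat sequences, so walking along a row touches adjacent memory in one layout and strided memory in the other.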

What’s new in Mojo 24.2: Mojo Nightly, Enhanced Python Interop, OSS stdlib and more

This will be your example-driven guide to Mojo SDK 24.2, as part of the latest MAX release. If I had to pick a name for this release, I’d call it MAXimum⚡ Mojo🔥 Momentum 🚀 because there is so much good stuff in this release, particularly for Python developers adopting Mojo.

The Next Big Step in Mojo🔥 Open Source

At Modular, open source is ingrained in our DNA. We firmly believe that for Mojo to reach its full potential, it must be open source. We have been progressively open-sourcing more of Mojo and parts of the MAX platform, and today we’re thrilled to announce the release of the core modules from the Mojo standard library under the Apache 2 license!

Semantic Search with MAX Engine

In the field of natural language processing (NLP), semantic search focuses on understanding the context and intent behind queries, going beyond mere keyword matching to provide more relevant and contextually appropriate results. This approach relies on advanced embedding models to convert text into high-dimensional vectors, capturing the complex semantics of language.
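As a rough illustration of ranking by vector similarity, the sketch below scores documents against a query using cosine similarity. The three-dimensional vectors are hand-written toy values standing in for real embeddings, and the `cosine_similarity` and `search` helpers are hypothetical names; none of this uses or represents the MAX Engine API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vec, corpus):
    """Return document keys ordered from most to least similar to the query."""
    return sorted(
        corpus,
        key=lambda doc: cosine_similarity(query_vec, corpus[doc]),
        reverse=True,
    )


# Toy "embeddings" (assumed values, not produced by any model).
corpus = {
    "cats": [0.9, 0.1, 0.0],
    "dogs": [0.8, 0.3, 0.1],
    "stocks": [0.0, 0.2, 0.9],
}
query = [1.0, 0.1, 0.0]

ranked = search(query, corpus)
print(ranked)
```

In a real system the vectors would come from an embedding model and have hundreds of dimensions, but the ranking step follows the same pattern.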

How to Be Confident in Your Performance Benchmarking

Mojo as a language offers three main benefits, namely the 3 P’s: Performance, Programmability and Portability. It enables users to write fast code, to do so more easily than in many alternative languages, and to run that code across different CPU platforms, with GPU support on the roadmap.

Mojo🔥 ❤️ Pi 🥧: Approximating Pi with Mojo🔥 using Monte Carlo methods

March 14th aka 3/14 or 3.14 is known as $\pi$ Day, and it honors the mathematical constant $\pi$ (pi), which represents the ratio of a circle's circumference to its diameter. On this special day, I wanted to dedicate a blog post to the beauty of mathematics, numerical methods, $\pi$, and Mojo. So join me on this journey as I implement a fast vectorized Monte Carlo approximation method of calculating $\pi$. Happy $\pi$ Day!
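For readers new to the technique, here is a minimal scalar sketch of the Monte Carlo idea in Python: sample random points in the unit square and count how many fall inside the quarter circle of radius 1, whose area is $\pi/4$. This is not the vectorized Mojo implementation the post describes; the `estimate_pi` function, its `seed` parameter, and the sample count are assumptions made for illustration.

```python
import random


def estimate_pi(num_samples, seed=0):
    """Estimate pi from the fraction of random points inside the quarter circle."""
    rng = random.Random(seed)  # seeded so repeated runs give the same estimate
    inside = 0
    for _ in range(num_samples):
        x = rng.random()
        y = rng.random()
        if x * x + y * y <= 1.0:  # point lies within the unit quarter circle
            inside += 1
    # The hit ratio approximates pi/4, so scale by 4.
    return 4.0 * inside / num_samples


approx = estimate_pi(100_000)
print(approx)
```

The error shrinks roughly with the square root of the number of samples, which is why a fast, vectorized sampling loop matters for this method.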

Evaluating MAX Engine inference accuracy on the ImageNet dataset

MAX Engine is a high-performance AI compiler and runtime designed to deliver low-latency, high-throughput inference for AI applications. We've shared how you can get started quickly with MAX in this getting started guide, and how you can deploy MAX Engine optimized models as a microservice using MAX Serving.

Optimize and deploy AI models with MAX Engine and MAX Serving

MAX Developer Edition preview is now available to developers worldwide. ICYMI, feel free to check out our getting started with MAX blog post. Today, I’d like to dive a little deeper and show how to build an end-to-end application using MAX.
